INTRODUCTION

Existing instruments for assessing mastoidectomy performance are tedious to apply and require extensive expert input, which is not always available. Computer-based approaches have been used to analyze instrument motion, which in turn serves as a proxy for surgical skill. When coupled with artificial intelligence (AI) techniques, these approaches can be automated, making assessment more efficient, objective, and scalable.

April 2025

Recent efforts have used computer vision (CV) to track instruments automatically during mastoidectomy; however, these approaches have been applied only to post-hoc video analysis. Even when automated, post-hoc analysis remains time-consuming, is temporally separated from the surgical experience, and requires large, asynchronous data transfers.

Implementing motion analysis in real time could give users immediate feedback on their surgical motion. Realizing the full potential of AI and CV in otolaryngology will require real-time integration of these tools. Although CV applications in otolaryngology have multiplied recently, no published reports describe how to implement these tools for real-time use. To that end, we developed a CV approach for real-time drill motion analysis during mastoidectomy on a 3D-printed temporal bone (TB) and detail the technical setup required for real-time implementation.

METHODS

Dataset Preparation

An image dataset was extracted from videos of mastoidectomies performed by the lead author on 3D-printed TBs. Videos were recorded with a high-definition camera (Karl Storz SE & Co KG, Tuttlingen, Germany) mounted to a Zeiss microscope (Carl Zeiss, Oberkochen, Baden-Württemberg, Germany). Frames were randomly extracted from the videos at 720 × 480 resolution using a custom Python script, and images in which the drill was not visible were excluded. In each remaining image, the drill burr was manually annotated with a bounding box to create ground truth annotations, which represent the true location of the object of interest; object detection models are evaluated by comparing their detections with these annotations. Diamond and cutting burrs of various sizes were included in the dataset.
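The frame sampling and box annotation steps above can be sketched in Python. This is a minimal illustration, not the authors' custom script: it assumes annotations are stored in the normalized text-label format that Ultralytics YOLO models train on (one `class x_center y_center width height` line per box), and the helper names `sample_frame_indices` and `to_yolo_label`, the single class index 0, and the random seed are hypothetical. Decoding the video frames themselves (e.g., with OpenCV) is omitted.

```python
import random

FRAME_W, FRAME_H = 720, 480  # extraction resolution reported in the text
DRILL_CLASS_ID = 0  # assumption: one "drill burr" class with index 0


def sample_frame_indices(total_frames, n_samples, seed=0):
    """Pick n_samples distinct frame indices at random (hypothetical helper)."""
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    return sorted(rng.sample(range(total_frames), n_samples))


def to_yolo_label(x1, y1, x2, y2, img_w=FRAME_W, img_h=FRAME_H,
                  class_id=DRILL_CLASS_ID):
    """Convert a pixel bounding box (two corners) to a YOLO-format label line."""
    # Order the corners so the box is valid whichever way it was drawn.
    left, right = sorted((x1, x2))
    top, bottom = sorted((y1, y2))
    # Clip to the frame so boxes around partly visible burrs stay in bounds.
    left, right = max(0, left), min(img_w, right)
    top, bottom = max(0, top), min(img_h, bottom)
    # YOLO labels store the box center and size, normalized to [0, 1].
    x_c = (left + right) / 2 / img_w
    y_c = (top + bottom) / 2 / img_h
    w = (right - left) / img_w
    h = (bottom - top) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


print(sample_frame_indices(total_frames=9000, n_samples=5))
print(to_yolo_label(300, 200, 372, 248))  # 0 0.466667 0.466667 0.100000 0.100000
```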

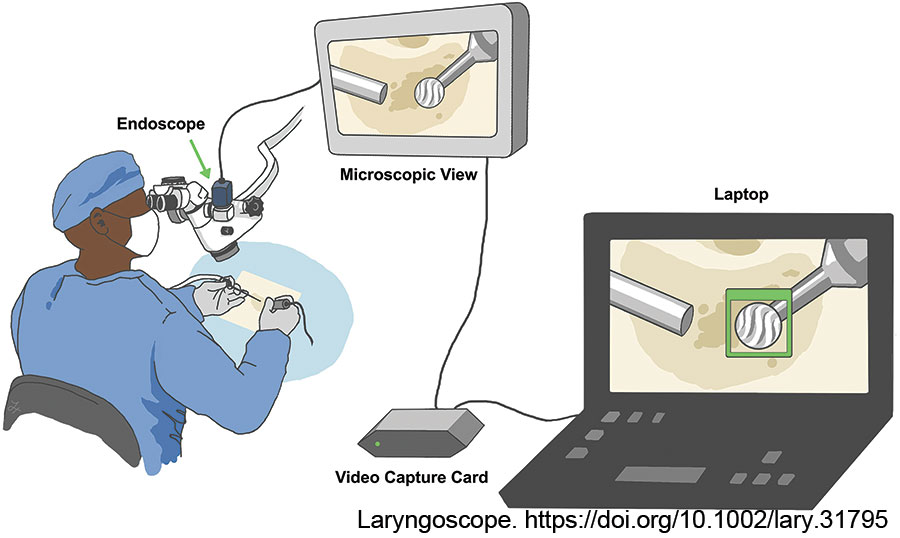

Figure 1: Illustration of the setup for real-time tracking. The live video data from the endoscope, which is mounted to the microscope, is transferred via the capture card to a laptop running the motion analysis model in real time (Illustration by Lucy Xu, MD). Laryngoscope. https://doi.org/10.1002/lary.31795

Two authors each annotated half of the dataset to avoid bias. The senior author independently reviewed a random subset of each author's annotations to confirm that annotation patterns were consistent. The dataset was divided into training (80%) and testing (20%) subsets. Our approach focused exclusively on drill detection, because the suction is often obscured by resin dust and poorly visualized when drilling 3D-printed resin TBs.
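An 80/20 split like the one described can be sketched as a seeded shuffle over image file names. How the authors randomized the split is not stated; the function name, the seed, the file-name pattern, and the choice to split individual images (rather than whole recordings) are assumptions for illustration.

```python
import random


def split_dataset(image_names, train_fraction=0.8, seed=42):
    """Shuffle image names reproducibly and split them into train/test lists."""
    names = list(image_names)  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(names)
    n_train = int(len(names) * train_fraction)  # floor; the remainder goes to test
    return names[:n_train], names[n_train:]


images = [f"frame_{i:05d}.jpg" for i in range(100)]  # hypothetical file names
train, test = split_dataset(images)
print(len(train), len(test))  # 80 20
```

Fixing the seed means the same split can be regenerated later, which keeps test images from leaking into training across reruns.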

Model Development and Evaluation

The training subset was used to train an object detection model with YOLOv8 (Ultralytics, https://github.com/ultralytics/ultralytics), an open-source CV framework. The trained model was then tasked with detecting and localizing the drill burr in the test data.
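Ultralytics trains from a small YAML file that points to the image folders and names the classes; it looks for each image's label file in a parallel `labels/` folder. The sketch below only builds such a config as text. The folder layout, the class name `drill`, and the `dataset_yaml` helper are hypothetical, and the training call in the comments (checkpoint size and epoch count included) is illustrative rather than the authors' configuration; running it would require the `ultralytics` package.

```python
def dataset_yaml(root, train_dir="images/train", val_dir="images/test",
                 class_names=("drill",)):
    """Build an Ultralytics-style dataset config as YAML text (hypothetical layout)."""
    lines = [
        f"path: {root}",        # dataset root directory
        f"train: {train_dir}",  # training images, relative to root
        f"val: {val_dir}",      # held-out images used for evaluation
        "names:",
    ]
    # Map class indices to names; index 0 must match the class_id in the labels.
    lines += [f"  {i}: {name}" for i, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


config = dataset_yaml("/data/drill_dataset")
print(config)

# With the config saved as drill.yaml, training would look roughly like:
#   from ultralytics import YOLO
#   model = YOLO("yolov8n.pt")   # pretrained checkpoint; the size is an assumption
#   model.train(data="drill.yaml", epochs=100)
#   metrics = model.val()        # reports precision, recall, mAP50 and mAP50-95
```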

Model performance was assessed on the test image subset, which was not encountered during training. An object detection model aims to localize and correctly classify the object of interest within an image, so performance reflects both detection accuracy and localization precision. We used the common CV performance metrics of recall, intersection over union (IoU), average precision (AP), and mean average precision (mAP). Recall is the proportion of ground truth drills that the model detects; here, it reflects how likely the model is to detect a drill in an image that truly contains one. IoU represents the degree of overlap between ground truth and model-predicted bounding boxes, computed as the area of their intersection divided by the area of their union; a prediction counts as correct only when its IoU with the ground truth box meets a chosen threshold. AP summarizes precision (the proportion of predicted boxes that are correct) across all levels of recall, as the area under the precision-recall curve, and is therefore reported at a specified IoU threshold. mAP is obtained by averaging AP values across a series of IoU thresholds. We report our model's mAP (0.5:0.95), a common CV metric denoting the average of AP values at 10 IoU thresholds from 0.5 to 0.95 in 0.05 increments.
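The metric definitions above can be made concrete with a small single-class implementation in plain Python. It is a simplified sketch, and the function names are hypothetical: detections are greedily matched to unmatched ground truth boxes in descending confidence order, and AP uses all-point interpolation of the precision-recall curve. COCO-style evaluation and Ultralytics instead sample precision at 101 recall points, so their values can differ slightly.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def match_detections(detections, ground_truth, iou_thr):
    """Flag each detection as a true (1) or false (0) positive at one IoU threshold.

    detections: list of (image_id, confidence, box)
    ground_truth: dict mapping image_id -> list of boxes
    """
    used = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    flags = []
    for img, _conf, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best_j, best_iou = None, iou_thr
        for j, gt in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt)
            if not used[img][j] and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j is None:
            flags.append(0)  # no unmatched ground truth box overlaps enough
        else:
            used[img][best_j] = True  # each ground truth box matches at most once
            flags.append(1)
    return flags


def recall(detections, ground_truth, iou_thr=0.5):
    """Share of ground truth boxes detected (no confidence cutoff applied here)."""
    n_gt = sum(len(b) for b in ground_truth.values())
    if n_gt == 0:
        return 0.0
    return sum(match_detections(detections, ground_truth, iou_thr)) / n_gt


def average_precision(detections, ground_truth, iou_thr):
    """Area under the precision-recall curve (all-point interpolation)."""
    n_gt = sum(len(b) for b in ground_truth.values())
    if n_gt == 0:
        return 0.0
    tp = fp = 0
    precisions, recalls = [], []
    for flag in match_detections(detections, ground_truth, iou_thr):
        tp += flag
        fp += 1 - flag
        precisions.append(tp / (tp + fp))
        recalls.append(tp / n_gt)
    # Replace each precision with the best precision at any later (higher) recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_r) * p  # recall only grows at true positives
        prev_r = r
    return ap


def map_50_95(detections, ground_truth):
    """Mean of AP at the 10 IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [round(0.5 + 0.05 * k, 2) for k in range(10)]
    return sum(average_precision(detections, ground_truth, t)
               for t in thresholds) / len(thresholds)


gt = {"img1": [(0, 0, 10, 10)]}
dets = [("img1", 0.9, (0, 0, 10, 5))]  # half-height box around the burr
print(iou((0, 0, 10, 5), (0, 0, 10, 10)))  # 0.5
print(map_50_95(dets, gt))  # 0.1: a hit only at the 0.50 threshold
```

The example shows why mAP (0.5:0.95) is stricter than AP at a single IoU of 0.5: a box that only half-covers the burr earns full credit at 0.50 but none at the nine tighter thresholds.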

The model was trained and evaluated in a private notebook on Google Colaboratory, a hosted online computing service, using an NVIDIA Tesla T4 GPU.

Drill Tracking and Motion Analysis

In contrast to object detection, which locates an object in independent frames, object tracking maintains an object's identity across a sequence of frames (i.e., video). To track the motion of the drill, the detection model was coupled to a multi-object tracking algorithm, Boosted Simple Online and Realtime Tracking (BoT-SORT, https://github.com/NirAharon/BoT-SORT). BoT-SORT combines motion filtering with appearance matching between detections to maintain an object's identity throughout a video stream.
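BoT-SORT itself combines Kalman-filter motion prediction, camera-motion compensation, and optional appearance features, which is too much for a short example. The sketch below is a deliberately simplified stand-in that shows only the core idea of identity maintenance: each new detection is greedily associated with the existing track it overlaps most (by IoU), and a track survives a few missed frames before being dropped. The class name, thresholds, and buffer length are hypothetical. Ultralytics also ships a BoT-SORT implementation selectable with `model.track(..., tracker="botsort.yaml")`; how the authors integrated the tracker is not described.

```python
from dataclasses import dataclass
from itertools import count


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


@dataclass
class Track:
    track_id: int
    box: tuple
    missed: int = 0  # consecutive frames without a matching detection


class SimpleIoUTracker:
    """Toy tracker: greedy IoU association plus a lost-track buffer (not BoT-SORT)."""

    def __init__(self, iou_threshold=0.3, max_missed=15):
        self.iou_threshold = iou_threshold
        self.max_missed = max_missed  # 15 frames = 0.5 s at 30 frames/s
        self.tracks = []
        self._next_id = count(1)

    def update(self, detections):
        """Associate one frame's detections (x1, y1, x2, y2) with existing tracks.

        Returns (track_id, box) pairs for every track matched or created this frame.
        """
        unmatched = list(range(len(detections)))
        output = []
        for track in self.tracks:
            best, best_iou = None, self.iou_threshold
            for i in unmatched:
                overlap = iou(track.box, detections[i])
                if overlap >= best_iou:
                    best, best_iou = i, overlap
            if best is None:
                track.missed += 1  # keep the last box; the drill may reappear
            else:
                unmatched.remove(best)
                track.box, track.missed = detections[best], 0
                output.append((track.track_id, track.box))
        for i in unmatched:  # detections with no match start new tracks
            track = Track(next(self._next_id), detections[i])
            self.tracks.append(track)
            output.append((track.track_id, track.box))
        # Forget tracks that have been missing for too long.
        self.tracks = [t for t in self.tracks if t.missed <= self.max_missed]
        return output


tracker = SimpleIoUTracker()
frames = [[(100, 100, 140, 140)], [(104, 102, 144, 142)], [], [(110, 105, 150, 145)]]
for n, dets in enumerate(frames):
    print(n, tracker.update(dets))  # the drill keeps ID 1 across the empty frame
```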

Temporary occlusions, in which the drill burr is partially obscured (e.g., by the suction), are handled by the tracking algorithms within BoT-SORT, so the drill can still be localized when it is only partially in view. In each video frame, the model plots a bounding box around the predicted location of the drill burr and extracts the box's center coordinate. These center coordinates are then entered into kinematic equations to analyze motion. The motion metrics computed by our model are instrument velocity, distance traveled, smoothness of motion, and total task duration; these were selected for their relationship to efficiency of motion, specifically in the context of mastoidectomy. Metrics are recorded both cumulatively and separately for each drill burr type used.
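The center-point kinematics described above can be sketched as follows. The source does not give the exact equations, so this is an illustration under stated assumptions: velocity and distance come from frame-to-frame displacement of the box center at the 30 frames/s capture rate, smoothness is approximated by mean jerk magnitude (the third time derivative of position; lower means smoother), and duration is the span of tracked frames rather than wall-clock task time. Coordinates stay in pixels because no pixel-to-millimeter calibration is described. The names `motion_metrics` and `summarize_by_burr`, and the idea that the burr type arrives with each tracked segment, are hypothetical.

```python
import math

FPS = 30.0  # capture rate of the live video feed


def box_center(box):
    """Center (x, y) of a bounding box given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)


def _derivative(series, dt):
    """Finite-difference time derivative of a list of (x, y) vectors."""
    return [((b[0] - a[0]) / dt, (b[1] - a[1]) / dt)
            for a, b in zip(series, series[1:])]


def motion_metrics(centers, fps=FPS):
    """Kinematic summary of one tracked segment of consecutive frame centers."""
    dt = 1.0 / fps
    velocity = _derivative(centers, dt)       # px/s
    acceleration = _derivative(velocity, dt)  # px/s^2
    jerk = _derivative(acceleration, dt)      # px/s^3 (assumed smoothness proxy)
    distance = sum(math.dist(p, q) for p, q in zip(centers, centers[1:]))
    speeds = [math.hypot(vx, vy) for vx, vy in velocity]
    jerk_mags = [math.hypot(jx, jy) for jx, jy in jerk]
    return {
        "distance_px": distance,
        "duration_s": max(len(centers) - 1, 0) * dt,
        "mean_speed_px_s": sum(speeds) / len(speeds) if speeds else 0.0,
        "mean_jerk_px_s3": sum(jerk_mags) / len(jerk_mags) if jerk_mags else 0.0,
    }


def summarize_by_burr(segments, fps=FPS):
    """Accumulate distance and duration per burr type and overall.

    segments: list of (burr_type, centers) pairs, one per tracked stretch.
    """
    per_burr = {}
    for burr, centers in segments:
        m = motion_metrics(centers, fps)
        agg = per_burr.setdefault(burr, {"distance_px": 0.0, "duration_s": 0.0})
        agg["distance_px"] += m["distance_px"]
        agg["duration_s"] += m["duration_s"]
    overall = {key: sum(a[key] for a in per_burr.values())
               for key in ("distance_px", "duration_s")}
    return per_burr, overall


boxes = [(0, 0, 10, 10), (3, 4, 13, 14), (6, 8, 16, 18), (9, 12, 19, 22)]
print(motion_metrics([box_center(b) for b in boxes]))
```

Keeping each tracked stretch as its own segment means a gap in tracking does not register as a sudden jump in position, which would otherwise inflate both distance and jerk.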

Technical Setup for Real-Time Motion Analysis

The endoscope is mounted to the operating microscope and captures the microscopic view. The endoscope's video feed, captured at 30 frames per second, is transferred via a DVI-to-HDMI cable to a video capture card (Elgato HD60X; Corsair Gaming, Milpitas, Calif.), which connects to a laptop running the motion analysis model (Fig. 1). The capture card converts the microscopic view into a live video stream that can be fed, frame by frame, into the model.

The model runs locally on an M3 MacBook Pro (Apple Inc., Cupertino, Calif.), with GPU-accelerated inference via Apple Silicon Metal Performance Shaders (MPS).

This study protocol was deemed exempt by the Mass General Brigham Institutional Review Board (Protocol: 2020P003439).

RESULTS

A total of 18,483 frames were extracted from 15 mastoidectomy recordings. After removing frames without a drill, the final image dataset included 7,986 images. The training and testing subsets contained 6,387 and 1,597 images and associated annotations, respectively.

When evaluated on the test subset, the detection model achieved a recall of 98.4% and an mAP (0.5:0.95) of 81.5%.

During a mastoidectomy performed on a 3D-printed TB, the model reliably analyzed instrument motion in real time throughout the procedure. In the described computing environment, the model took an average of 30 milliseconds to localize the drill in each frame of the live video, resulting in minimal appreciable delay. When terminated by the user, the motion analysis model generated a summative feedback report detailing motion metrics and task duration.
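A back-of-the-envelope check shows why a 30-ms mean inference time keeps pace with the 30 frames/s feed, assuming inference dominates per-frame latency (capture and display overheads are not reported):

$$
\Delta t_{\text{frame}} = \frac{1}{30\ \text{s}^{-1}} \approx 33.3\ \text{ms} > 30\ \text{ms},
\qquad
\text{throughput} \approx \frac{1}{30\ \text{ms}} \approx 33\ \text{frames/s} \ge 30\ \text{frames/s}.
$$

On average, each frame is therefore processed before the next one arrives, with roughly 3 ms of headroom; individual frames that take longer than about 33 ms would need to be queued or skipped.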
